2001-2010

+++++++++++++++++++++++++++++++++++++++++++++++++++

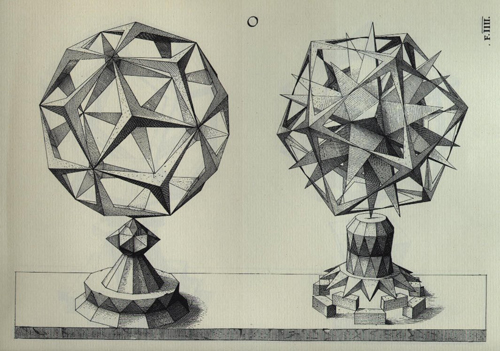

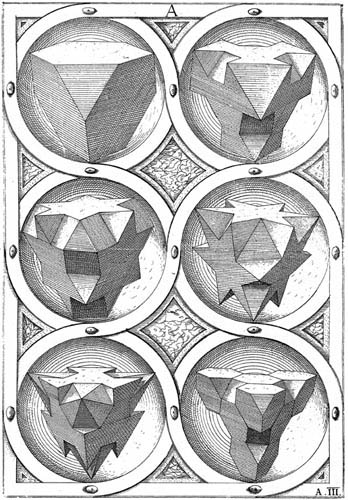

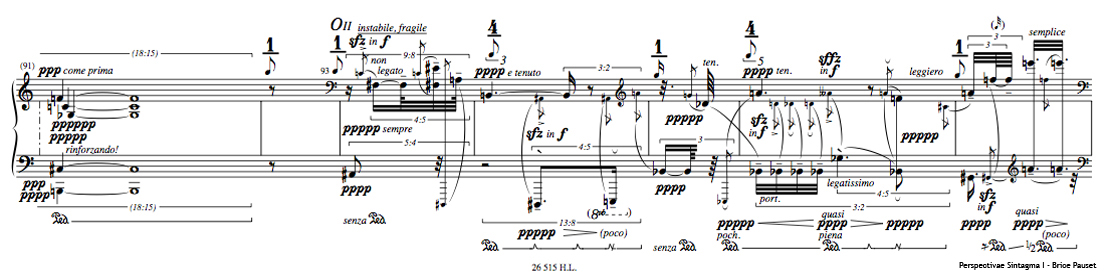

Perspectivæ Sintagma I (literally “Perspective Treatise”) is a diptych concerned in particular with the treatment of musical time. The subtitle “canons” indicates that a number of compositional techniques of the past, especially those of the medieval period, are a deep source of inspiration. The relationship to these past techniques is of course subject to historical and critical objectification. It applies to areas of temporal activity that were previously kept separate: measure, metre, rhythm… The terrible fantasy of “geometrizing his entire life”, evoked by Leopardi in the Zibaldone and cited by Massimo Cacciari, resonates with the elusive figures that Wentzel Jamnitzer engraved in 1568.

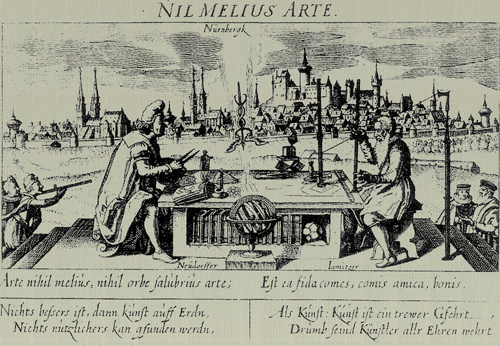

Perspectiva Corporum Regularium (Perspective of the Regular Solids) was published in 1568. The book is remembered for its engravings of polyhedra. It is based on Plato’s Timaeus and Euclid’s Elements, and it contains 120 forms based on the Platonic solids.

You can get the 1568 edition here (Latin; JPEG scans from the Sächsische Landesbibliothek, Dresden).

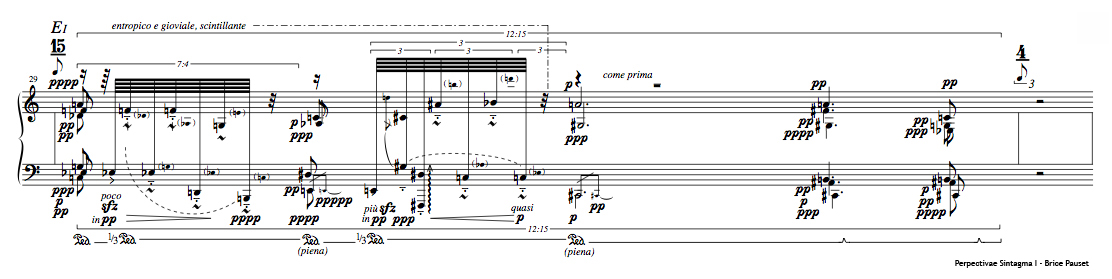

The importance of perspective affects many areas of Perspectivæ Sintagma I: the perspective inherent in the canon, of course, but also the possible perspective between the writing and its interpretation. During the performance of the piece, the pianist is constantly compared with the ideal, geometric score stored in the computer. The tiny gaps between the performer and the actual score are fed in real time into a compositional algorithm. This system reproduces the same synthesis process as the one used in the written score. In a sense, canons are the true subject of Perspectivæ Sintagma I, whether written, interpreted or re-evaluated while being played.


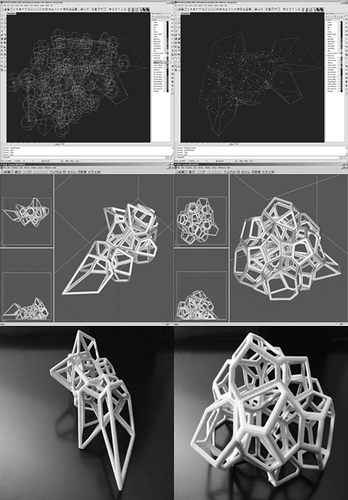

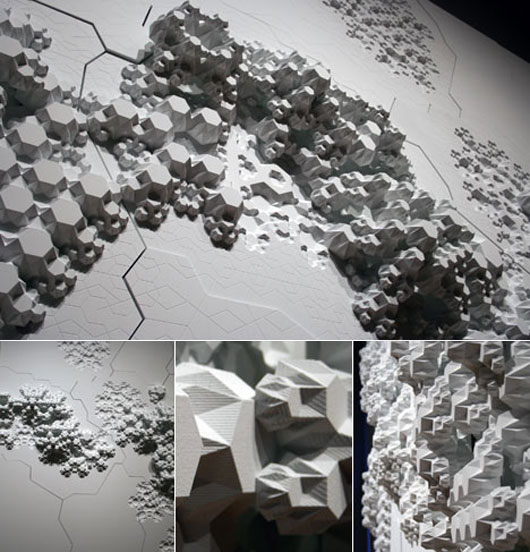

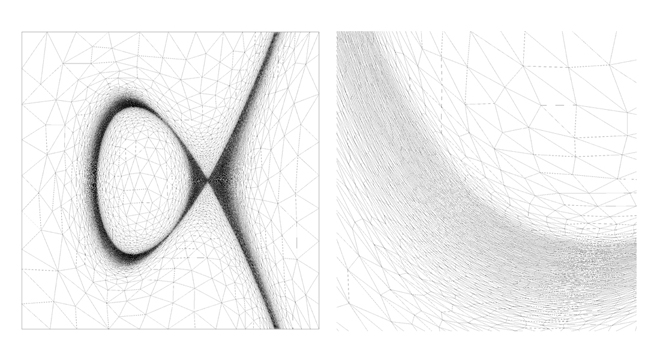

The question of perspective is a starting point for many generative processes in architectural design and “modern” composition. It is not only a historical question but also a question of perception. The edges of perception, whether musical or material, are often joined by points. Those points can be notes, visual edges in an image, real obstacles, measures, etc. The connections between those edges can be marked out by what forms the intersection of two non-parallel straight lines: points. The perceived position of these points changes with the position of the viewer. Forced perspective is one of many techniques for controlling spatial perspective. Why not use such distortions in the time dimension? Once all spatial dimensions and time have been distorted, there is a next step: it is also possible to play with discrete spaces and times, that is, scales and dates. A particular process takes place at a specific date and place. Spatial and temporal distortions would then result from the order and length of the processes themselves. This concept is widely used in nanotechnology and in almost all multi-agent systems (see my Sound Agent project). The architects Aranda & Lasch demonstrate it with their art piece Rule of six. With Kaspar I show something similar by playing with scale relativity theory: generative rules change depending on the observed scale, the point of view and time, and conceptual connections are made between one rule and another. The astrophysicist Laurent Nottale wrote an interesting paper about this.

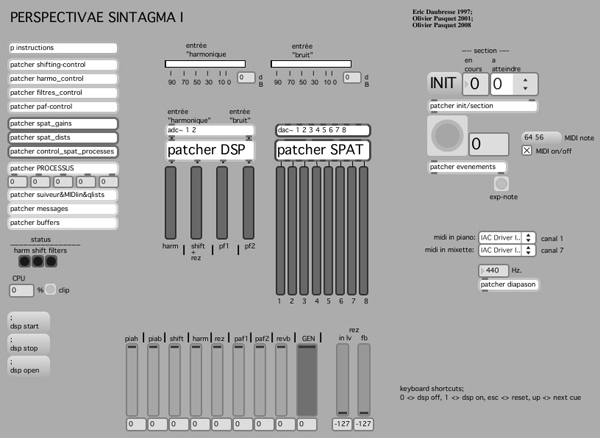

Four main components are involved in the electronics. They are the most common ones in the computer music chain: score following, various real-time transformations, spatialization, and canonic generation and synthesis. At the premiere, everything ran on a NeXT computer with the additional Ircam DSP hardware called ISPW.

Three different score followers have been used over the history of the piece. The oldest one was used for the first performances. It relied on the Max object explode (which later became detonate). It was then replaced by another system that I built myself in the early 2000s. That system does not try to follow every note, only the most relevant ones. Later, in the early 2010s, it was replaced by a more advanced system called Antescofo.

Live transformations are rather basic, but they also carry considerable historical weight. They are ring modulation, frequency shifting, harmonizing without preserving the spectral envelope, delays and resonant filter banks. Much of their interest lies in this historical dimension. For instance, ring modulation and frequency shifting were widely used in the early days of electronic music, and they have become almost mythical when applied to a piano. Mantra by Karlheinz Stockhausen, for two pianos, is an amazing example. The control of these effects is rich and rhythmically precise, which means that a huge database of events and parameters is stored in the program. Play a frequency-shifted piano to an electronic musician and they will love it.
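To make the simplest of these transformations concrete: ring modulation multiplies the input by a sine carrier, so a 440 Hz partial with a 100 Hz carrier yields components at 340 Hz and 540 Hz. The following is only a minimal sketch in Python; the function names and the chosen frequencies are illustrative assumptions, not the patch used in the piece.

```python
import math

def sine(freq_hz, sample_rate, n_samples):
    """Return n_samples of a unit-amplitude sine wave."""
    return [math.sin(2.0 * math.pi * freq_hz * n / sample_rate)
            for n in range(n_samples)]

def ring_modulate(signal, carrier_hz, sample_rate):
    """Multiply each input sample by a sine carrier (ring modulation)."""
    return [x * math.sin(2.0 * math.pi * carrier_hz * n / sample_rate)
            for n, x in enumerate(signal)]

# Illustrative values only (assumptions, not taken from the piece).
SAMPLE_RATE = 8000
tone = sine(440.0, SAMPLE_RATE, 64)
modulated = ring_modulate(tone, 100.0, SAMPLE_RATE)
```

Because sin(a)·sin(b) = ½[cos(a−b) − cos(a+b)], the output contains only the difference and sum frequencies, which is what gives a ring-modulated piano its bell-like, inharmonic colour.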

The voice and the piano are in the concert hall. Each note or sound event can be enveloped and placed in space. A very particular system is used: spatialization by envelope. Instead of being placed and moved in space, the sound source is sent to all the speakers, and envelopes control the gain of each speaker. If these envelopes were periodic, we could say that low-frequency oscillators are being used. The opening of each speaker is rhythmically controlled. Each envelope can be modified or interpolated through its speaker assignment, its shape, its attack, its duration or its overlap with the other envelopes. This technique is very effective when it is precisely synchronized with the acoustic instruments. For instance, a pizzicato in the middle of an orchestra could be reversed by an envelope and sent to a specific speaker. When envelopes are played fast, they become audible themselves and can produce a spatial granular synthesis. After years of using this technique, I found it very close to some tessellation techniques in architectural design. This is why I use it for the audio part of my form projects.
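The principle of spatialization by envelope can be sketched in a few lines of Python: the same mono signal goes to every speaker and only the per-speaker gain envelopes differ. The envelope shape (linear attack, linear release), the `(start, attack, duration)` parameters and the function names are assumptions made for illustration; the original system runs in real time and is certainly richer.

```python
def envelope_gain(t, start, attack, duration):
    """Gain in [0, 1]: linear rise over `attack` seconds, then linear fall
    reaching zero at `start + duration`. Zero outside the envelope."""
    local = t - start
    if local < 0.0 or local >= duration:
        return 0.0
    if local < attack:
        return local / attack
    return (duration - local) / (duration - attack)

def spatialize(samples, sample_rate, envelopes):
    """Send one mono signal to all speakers, scaled by each speaker's envelopes.

    `envelopes` maps a speaker index to a list of (start, attack, duration)
    tuples in seconds. Returns a dict of speaker index -> list of samples.
    """
    outputs = {}
    for speaker, events in envelopes.items():
        channel = []
        for n, x in enumerate(samples):
            t = n / sample_rate
            # If several envelopes overlap on one speaker, keep the loudest.
            gain = max((envelope_gain(t, *e) for e in events), default=0.0)
            channel.append(x * gain)
        outputs[speaker] = channel
    return outputs

# Hypothetical example: a constant signal opened first on speaker 0,
# then on speaker 1.
outputs = spatialize([1.0] * 8, 8, {0: [(0.0, 0.25, 0.5)],
                                    1: [(0.5, 0.25, 0.5)]})
```

Making the envelopes periodic would turn each gain into a low-frequency oscillator, and shortening them to a few milliseconds moves the result toward the spatial granular synthesis mentioned above.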

I often say that sound spatialization is largely a matter of fantasy once we leave cinema, gaming or field-recording approaches such as Ambisonics or WFS. Psychoacoustic limits are quickly reached, so placing objects precisely at a specific location is often of purely conceptual interest. The effect is even stronger in traditional concert situations, where no two members of the audience hear the same thing. Listeners only hear vague movements that can be more or less magnified. Therefore, it is rarely useful to place sound objects precisely in space, especially in mixed music with acoustic instruments placed at the front of the stage. Spatialization by envelope is another approach, one that does not position sounds in space. It is just a question of spatial stimuli for all listeners. We do not care where a single sound source is. Only the relation between events, which gives a precise overall idea of space, is important. This is particularly the case when there are many events, as with a full orchestra in the same projection hall.

It is possible to produce traditional panning with these envelopes if they have the right shape and overlap properly. Perspectivæ Sintagma I also uses a more traditional spatialization system: panning.
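One way to read “the right shape” is the standard equal-power law: if the envelope closing one speaker follows a quarter cosine while the envelope opening the next follows a quarter sine over the same overlap, the total power stays constant and the result behaves like a conventional pan. This is an assumption for illustration; the text does not say which shapes the piece actually uses, and the function name is hypothetical.

```python
import math

def overlapping_envelopes(t, overlap):
    """Gains of two adjacent speakers during an overlap of `overlap` seconds.

    The first envelope fades out (quarter cosine) while the second fades in
    (quarter sine). Times outside [0, overlap] are clamped.
    """
    position = min(max(t / overlap, 0.0), 1.0)
    angle = position * math.pi / 2.0
    return math.cos(angle), math.sin(angle)

# Sample the crossfade at five points of a 2-second overlap.
gains = [overlapping_envelopes(step * 0.5, 2.0) for step in range(5)]
```

At every point of the overlap the squared gains sum to one, which is why the source is perceived as moving rather than dipping in level between the two speakers.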

Rather than advanced effects, traditional transformations are applied to the instrumentalists. They are historical rather than merely traditional. This historical aspect is very interesting and powerful because it adds cultural references to the effect itself. Being historical does not go against modernity: the piece uses the latest signal processing techniques. For instance, in 2010 it is still difficult to make acoustically proper transpositions (ones that keep the spectral envelope).

Precise synchronization between machines and musicians is very important for three reasons. First, the score for the acoustic instruments is tightly written. Second, spatialization needs tight synchronization if we want to make proper use of its capabilities. Finally, it is a conceptual and aesthetic choice: if the score deals with micro-events close to the limits of perception and playability, why not do the same with the electronics and the limits of computers? In other pieces, I have used various types of synchronization. In some cases there are so many events that it is difficult and rather risky to use real cues (“go”, trigger, discrete events). Instead, I still use a click track, where no triggered cues are needed. The electronics then behaves like a tape; the only real-time component is the audio stream. Conductors or players hear (in-ear monitor) or see (laptop) a tick giving the beats or subdivided beats. An automatic score follower, for instance something like Antescofo from Arshia Cont, could be used in a utopian case or with a simpler score. It would not work with that level of complexity, but I believe it will come soon.

Using a click track brings some problems. For instance, the musical result sounds very mechanical, and it is very tiring for players, who end up thinking they are just machines. Instead, I have decided to use an original type of synchronization that keeps the advantages of a click track. Every bar or defined event can be triggered by hand. If it is not triggered, the electronics keeps its own timing. If it is triggered, the time of the electronics is compared with the time of the instrumentalists. The entire electronics, including sound files, is then played faster or slower to catch up with the instruments. Finally, the electronics continues at the newly found tempo.
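The hand-triggered synchronization described above can be sketched as follows. The text only states that the two times are compared, that the electronics is played faster or slower to catch up, and that it then continues at the new tempo; the catch-up window, the tempo estimate from the last two triggers and all names below are assumptions made for this sketch.

```python
from dataclasses import dataclass

@dataclass
class Electronics:
    position: float                 # current position of the electronics, in beats
    tempo: float                    # current tempo, in beats per second
    last_trigger_time: float = 0.0  # clock time of the previous trigger, in seconds
    last_trigger_beat: float = 0.0  # score position of the previous trigger, in beats

def on_trigger(state, now, score_beat, catch_up_seconds=1.0):
    """Handle a manual trigger received at clock time `now` for `score_beat`.

    Returns (catch_up_rate, new_tempo), both in beats per second.
    `catch_up_seconds` must be positive.
    """
    elapsed = now - state.last_trigger_time
    # Tempo actually played by the musicians since the previous trigger;
    # keep the old tempo if two triggers arrive at the same instant.
    new_tempo = ((score_beat - state.last_trigger_beat) / elapsed
                 if elapsed > 0 else state.tempo)
    # Positive gap: the electronics is behind the musicians.
    gap = score_beat - state.position
    # During the catch-up window the musicians keep moving at new_tempo,
    # so the electronics must cover that distance plus the gap.
    catch_up_rate = new_tempo + gap / catch_up_seconds
    state.last_trigger_time = now
    state.last_trigger_beat = score_beat
    state.tempo = new_tempo
    return catch_up_rate, new_tempo

# Hypothetical situation: after 2 s at 1.5 beats/s the electronics is at beat 3,
# but the musicians have just reached beat 4.
state = Electronics(position=3.0, tempo=1.5)
rate, tempo = on_trigger(state, now=2.0, score_beat=4.0)
```

In this example the electronics would run at 3 beats per second for one second, reaching beat 6 at the same moment as the musicians, and then settle at 2 beats per second. When no trigger arrives, nothing is called and the electronics simply keeps advancing at `state.tempo`, which preserves the click-track behaviour.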

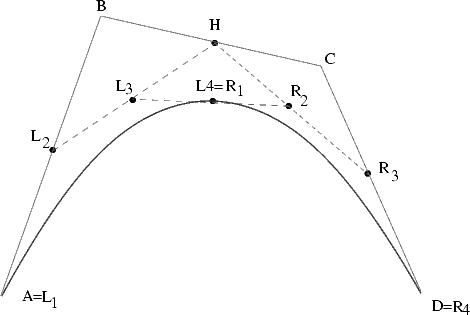

The introductions are essential for starting the canon. Thanks to the score follower, the computer knows whether the pianist’s interpretation is “fair”, or rather ahead of or behind the expected pace. The collected data are negative numbers when the pianist is early, zero when the tempo is right, and positive when the pianist is late. These numbers form a series, for example: (-5 1 -4 2 6 4). The computer uses this series to generate the durations of its response to the subject proposed by the piano: positive values give durations of sound, negative values give durations of silence (the number 0 is read as 1). At this point a concept comes into play that is found throughout the piece and at all levels: targeting. This means that the computer does not simply read this series and, more importantly, does not take it as the starting point for a serial development. Instead, it uses the series as a target, a point of arrival. The computer thus builds permutations of these numbers that lead to the order of the “parent series”. It should be noted that the permutations are not governed by mathematical logic; they are the result of chance.
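The two ideas in this paragraph, reading the series as sounds and silences and “targeting” the parent series through chance permutations, can be illustrated with a short Python sketch. The reading rule comes from the text; the particular way of reaching the target (start from a random shuffle, then repair one randomly chosen position at a time) is an assumption, since the text only says that the permutations are driven by chance and arrive at the parent series.

```python
import random

def to_events(series):
    """Positive values become notes, negative values rests; 0 is read as 1."""
    events = []
    for value in series:
        if value == 0:
            value = 1
        kind = "note" if value > 0 else "rest"
        events.append((kind, abs(value)))
    return events

def permutations_toward(target, rng):
    """Return a list of permutations that ends on `target`.

    Assumed mechanism: shuffle the series, then repeatedly pick a misplaced
    position at random and swap the expected value into it.
    """
    target = list(target)
    current = list(target)
    rng.shuffle(current)
    path = [list(current)]
    while current != target:
        wrong = [i for i, v in enumerate(current) if v != target[i]]
        i = rng.choice(wrong)
        # Some other misplaced position necessarily holds the value needed at i.
        j = next(k for k in wrong if current[k] == target[i])
        current[i], current[j] = current[j], current[i]
        path.append(list(current))
    return path

parent = [-5, 1, -4, 2, 6, 4]
path = permutations_toward(parent, random.Random(0))
events = to_events(parent)
```

Each element of `path` would correspond to one measure of the computer’s response, the last one being the parent series itself.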

Every permutation is a measure. Each series is therefore read in proportion to the duration of its measure. The computer’s voice always starts one measure after the piano, which explains the recovery of the final section (w). The timbre of this voice is made of synthesized sounds very similar to the piano (without the attack transients). It is therefore a two-part canon whose voices are similar but not quite equal: the second voice changes its rhythmic profile at each performance, according to varying parameters dictated by the vagaries of interpretation. That is why we talk about a random canon.
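One plausible reading of “in proportion to the duration of the measure” is that the absolute values of a series are rescaled so that together they fill exactly one measure. The sketch below follows that reading, which is an assumption, and uses exact fractions of a beat; `fit_to_measure` is a hypothetical name.

```python
from fractions import Fraction

def fit_to_measure(series, measure_beats):
    """Scale a series so that its events fill one measure exactly.

    Returns a list of (is_sound, duration_in_beats) pairs. Positive values
    are sounds, negative values silences, and 0 is read as 1.
    """
    values = [1 if v == 0 else v for v in series]
    total = sum(abs(v) for v in values)
    return [(v > 0, Fraction(abs(v) * measure_beats, total)) for v in values]

# The example series from the text, fitted into a hypothetical 4-beat measure.
fitted = fit_to_measure((-5, 1, -4, 2, 6, 4), 4)
```

With the series (-5 1 -4 2 6 4) and a 4-beat measure, the 22 units of the series are each worth 2/11 of a beat, so the opening silence lasts 10/11 of a beat and the whole series sums to exactly 4 beats.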

The permutations read by the computer determine the durations but not the notes of the responses. These are taken from a line in the piano score or, rather, they represent only a few notes inserted in a script designed on several levels.

If you found this article interesting, you can have a look at Symphonie III, Exercices du Silence, Perspectivæ Sintagma II, Perspectives. Also, for further details, the Ircam revue ‘inoui’ provides an excellent article.